2020. 2. 7. 08:38 · Uncategorized

Also, I think all this discussion about templates and generics totally misses the point of meta-programming. It is not just about code generation or replacing a few type declarations; the main thing is compile-time reflection. The fact that we use templates is a mere implementation detail.

What is important is being able to express complicated abstract relations between parts of your program and allowing the compiler to both verify them and optimize based on that information. Boilerplate elimination without such verification is not nearly as tempting.

On Wednesday, 18 June 2014 at 16:55:53 UTC, Dicebot wrote: On Wednesday, 18 June 2014 at 16:19:25 UTC, c0de517e wrote: But as I wrote, I doubt that people will at some point think that yes, now D is 100% a better version of C/Java/younameit, let's switch. I don't think that's how things go. I think successful languages find one thing a community really can't live without, get adopted there, and expand from there. JavaScript is horribly broken, but some people really needed to be able to put code client-side on web pages, so now JS is everywhere.


I think this is actually a flawed mentality that causes a lot of long-term problems for all programmers. By resisting switching to languages simply because they are good, we inevitably get to the point of switching because it is forced by some corporation that has the bucks to create an intrusive ecosystem.

And despite the fact that the language itself can be horrible, no choice remains by then. This is, but that's how it works nevertheless.


You don't succeed by arguing what the reality should be, but by accepting what it is and acting accordingly. When I write that engineers have to understand how the market works, it's not that I don't understand what's technically good and bad, but that's not how things become successful. And there's nothing wrong at all with the fact that soft factors matter more than technical perfection, because we make machines and programs for people, not to look at how pretty they seem.

On 6/18/2014 3:09 PM, c0de517e wrote: On Wednesday, 18 June 2014 at 18:18:28 UTC, Dicebot wrote: On Wednesday, 18 June 2014 at 18:17:03 UTC, deadalnix wrote: This is, but that's how it works nevertheless. You don't succeed by arguing what the reality should be, but by accepting what it is and acting accordingly.

Being ashamed of it instead of glorifying such an attitude is one way to motivate a change :)

You can't fight human psychology, but if you're -really- smart you strive to understand it and work with it.

There's a *big* difference between 'human psychology' and 'being an idiot who makes decisions poorly'.

For the former, unconditional acceptance is the only possible option. But for the latter, unconditional acceptance is nothing more than a convenient way to justify (and in effect, encourage) idiocy; it's both self-destructive and entirely avoidable given the actual willingness to avoid it. The belief that 'No amount of improvement is worthwhile unless it comes with some single killer feature' might be common, but it definitely is NOT an immutable aspect of human psychology: It's just plain being an idiot who's trying to rationalize their own laziness and fear of change, instead of doing a programmer's/engineer's JOB of making decisions based on valid reasoning. It's NOT an immutable 'human psychology' belief until someone's DECIDED to rationalize it as such and make excuses for it.

This is something I feel very strongly about. It is *THE #1* reason the world, and especially the tech sector, has become so pathetically inundated with morons and idiocy: because instead of fighting and condemning stupidity, it's excused, accepted and even rewarded. That's exactly why so much has gone soooo fucking wrong.

On Wednesday, 18 June 2014 at 16:55:53 UTC, Dicebot wrote: On Wednesday, 18 June 2014 at 16:19:25 UTC, c0de517e wrote: But as I wrote, I doubt that people will at some point think that yes, now D is 100% a better version of C/Java/younameit, let's switch. I don't think that's how things go. I think successful languages find one thing a community really can't live without, get adopted there, and expand from there. JavaScript is horribly broken, but some people really needed to be able to put code client-side on web pages, so now JS is everywhere.

I think this is actually a flawed mentality that causes a lot of long-term problems for all programmers. By resisting switching to languages simply because they are good, we inevitably get to the point of switching because it is forced by some corporation that has the bucks to create an intrusive ecosystem.

And despite the fact that the language itself can be horrible, no choice remains by then.

This is especially important in systems programming languages, as the majority of developers only use what is available in the OS/hardware vendor's SDK.

On Wednesday, 18 June 2014 at 19:09:08 UTC, c0de517e wrote: On Wednesday, 18 June 2014 at 18:18:28 UTC, Dicebot wrote: On Wednesday, 18 June 2014 at 18:17:03 UTC, deadalnix wrote: This is, but that's how it works nevertheless.

You don't succeed by arguing what the reality should be, but by accepting what it is and acting accordingly. Being ashamed of it instead of glorifying such an attitude is one way to motivate a change :) You can't fight human psychology, but if you're -really- smart you strive to understand it and work with it.

No, this is what you do to pretend to be a smart and pragmatic person, an approach so popularized by modern culture that I sometimes start thinking it is intentional. You see, while fighting human psychology (actually 'mentality' is the correct term here, I think) definitely does not work, influencing it is not only possible but has in fact happened all the time throughout human history. Mentality is largely shaped by aggregated culture, and any public action you take affects that aggregated culture in some tiny way. You can't force people to start thinking in a different way, but you can start being an example of a different attitude yourself, popularizing and encouraging it. You can stop referring to that unfortunate trait of mentality as an excuse for not adopting the language in your blog posts - it will do fine without your help.

You can casually mention how much wasted effort and daily inconvenience such an attitude causes to your co-workers (in a gentle non-intrusive way!). You can start acting as if the mentality is different instead of going the route of imaginary pragmatism. In practice, acting intentionally irrational is the only way to break the prisoner's dilemma and the way people have influenced culture and mentality all the time.

It may not change the thinking process of contemporary adults, but a few people doing stupid things here and there can accumulate enough cultural change to influence the future. Considering the amount of 'not smart' things I have done through my life, by now it must have been totally fucked up.

Failing to notice that indicates that something is fundamentally wrong with the popular image of pragmatism.

You can casually mention how much wasted effort and daily inconvenience such an attitude causes to your co-workers (in a gentle non-intrusive way!). You can start acting as if the mentality is different instead of going the route of imaginary pragmatism. In practice, acting intentionally irrational is the only way to break the prisoner's dilemma and the way people have influenced culture and mentality all the time.

I would fight irrational choices, that's agreeable. But the thing is that the technical plane is not the only thing to consider when making rational choices. It is totally rational to understand that things like proficiency, education, legacy, familiarity, environment and future-proofing affect the decision of which language to use.

It's totally rational, and a reason why adoption needs to climb a much higher barrier than simply noting, oh this is much better, just switch. It's like going to a guitarist and trying to have him switch a guitar he played for his lifetime just saying here, this one has less noise, why are you so irrational, it's clearly better.

On Tuesday, 17 June 2014 at 22:24:06 UTC, c0de517e wrote: Visualization would be a great tool, it's quite surprising if you think about it that we can't in any mainstream debugger just graph over time the state of objects, create UIs and so on. I recently did write a tiny program that does live inspection of memory areas as bitmaps, I needed that to debug image algorithms so it's quite specialized.

But once you do stuff like that it comes natural to think that we should have the ability of scripting visualizers in debuggers and have them update continuously at runtime.

Man, I expressed similar thoughts years ago. Software pumps data in, operates on it, and pumps new data out: why don't we have proper visualization tools for those data flows?

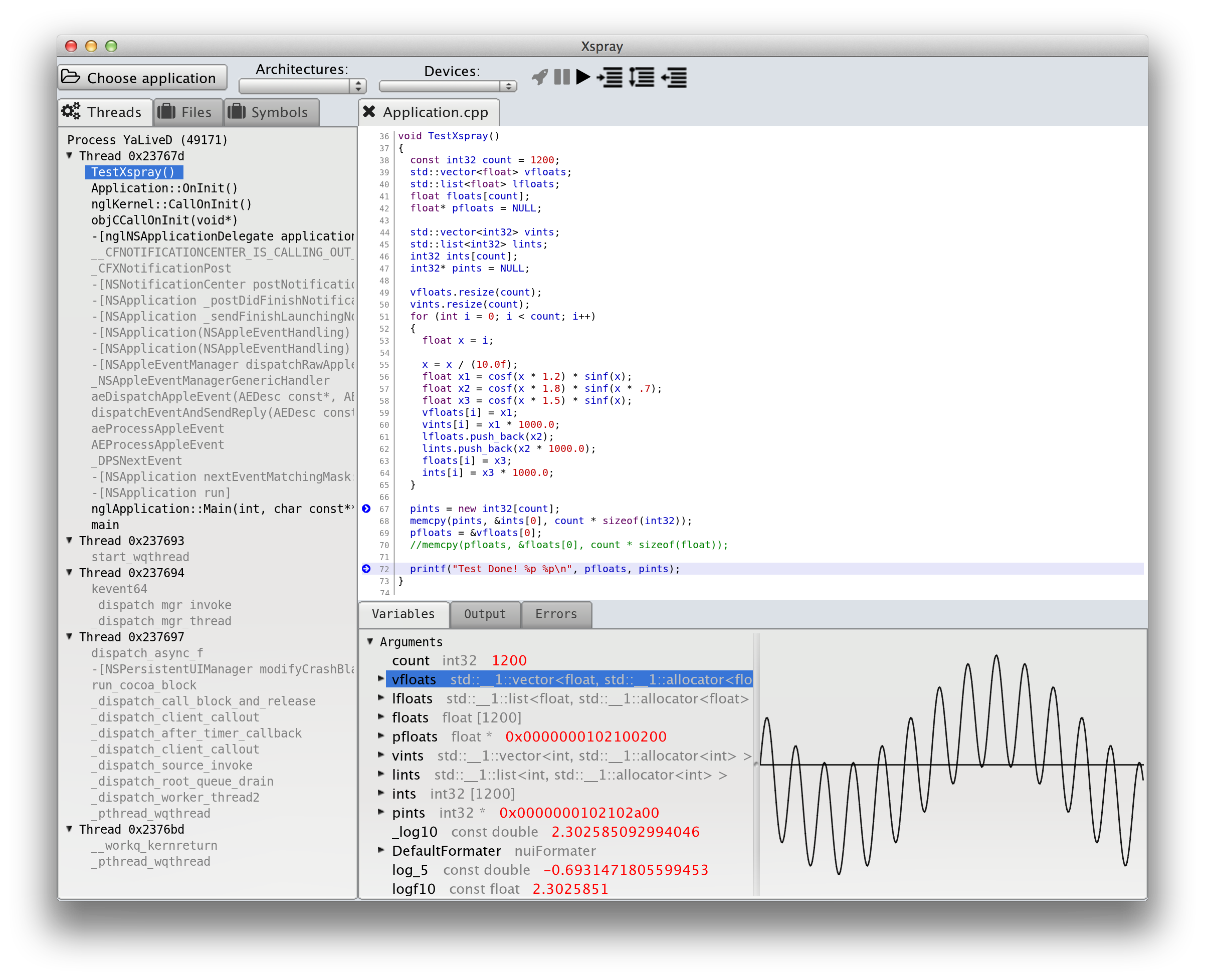

Only being able to freeze program state and inspect it at repeated snapshots in time with a debugger is so backwards: it's like we're still stuck in the '80s. Then, right after I see you mention it too, I happen to run across a recent lldb frontend for OSX/iOS- he gave up on Android;) - that does exactly that.

lldb-dev ANN Xspray - an lldb experiment
Sebastien Metrot, Wed Jun 4 16:53:18 PDT 2014

Hello,

TL;DR: Xspray is a prototype for an lldb GUI for MacOSX and iOS. It’s open source. It’s still very basic, but it works and it shows some cool ideas about what we could do for native debugging.

Check it out:

Also, I’m looking for a job.

The full story: About one year ago I took some time experimenting with creating a new GUI for lldb.


My idea was to build a debugger that allows deep inspection of data at runtime. As a developer it is often very frustrating to have the IDE stop in the code and not be able to really inspect variables that are big containers (beyond a dozen floats). When I deal with real time audio, image processing, video, maths, etc., not having access to a more visual representation of my data often makes my life miserable. I would also like to have a complete set of tools to analyse my data: computing the average, the sum, or the first derivative of a big vector of floats can be life saving. So I started to toy with lldb on the Mac and I developed a prototype to see if my idea was feasible. LLDB is an absolutely amazing project (along with LLVM and Clang) and developing a simple plotter that can inspect std::vector and std::list at runtime was relatively easy on OSX (and your answers to my newbie questions on the list have been very helpful too!).
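As a rough illustration of the analysis tools mentioned above (sum, average, first derivative of a vector of floats), here is a minimal C++ sketch. It is not taken from Xspray: the names `Summary`, `summarize` and `first_difference` are hypothetical, and the "first derivative" is approximated by a forward difference between neighbouring samples, assuming the samples are evenly spaced.

```cpp
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical helper types and names, for illustration only.
struct Summary {
    double sum = 0.0;
    double mean = 0.0;  // stays 0.0 for an empty vector
};

// Sum and average of the samples, accumulated in double precision.
Summary summarize(const std::vector<float>& v) {
    Summary s;
    s.sum = std::accumulate(v.begin(), v.end(), 0.0);
    if (!v.empty()) {
        s.mean = s.sum / static_cast<double>(v.size());
    }
    return s;
}

// Forward difference d[i] = v[i + 1] - v[i], a discrete stand-in for the
// first derivative when samples are evenly spaced (assumed unit spacing).
std::vector<float> first_difference(const std::vector<float>& v) {
    std::vector<float> d;
    if (v.size() < 2) {
        return d;
    }
    d.reserve(v.size() - 1);
    for (std::size_t i = 0; i + 1 < v.size(); ++i) {
        d.push_back(v[i + 1] - v[i]);
    }
    return d;
}
```

A debugger front end would presumably copy the container's contents out of the debugged process first and then run this kind of function on the copy; how that copy is obtained is outside this sketch.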

Making it work with an iOS target proved much harder, as there is very little documentation about how Xcode and iTunes install applications and start the debugger there. After much trial and error I succeeded in getting that working too. The next idea was to build a complete GUI for LLDB that would work on Android too: native debugging on that platform is a pure nightmare of horrible scripts and arcane command line instructions. Unfortunately lldb was not at all able to gdb-remote a linux host (even x86 gdb-remote didn’t work at all at the time) and an android debugserver implementation seemed out of the question. Which is really a shame, as I’m pretty sure game developers (for example) would love to have a full blown debugger on that platform (and one with the features I envisioned would be really very cool). So I stopped working on it (also I was running out of free time).

Unfortunately, I haven’t had much time to really touch my prototype since September 2013, as after giving the idea some thought I haven’t found compelling proof that I could make a living by selling this kind of software (nobody wants to launch a separate debugger from Xcode, right?). But I thought it was too bad to have all that code lying there doing nothing and helping nobody.

So I have decided to release this small prototype as an open source project on github:

Here is a list of some of the features that I wanted to add one day (and I might do it one day):

- TimeMachine for debugger: store the value of every variable at every point of the stack trace on each breakpoint stop. The idea is to have a timeline slider that lets you go back in time and still see what happened a couple of loop iterations before the current one, for example. (Don’t you hate it when you have an error occurring after about 50 iterations of a loop and in your StepOver frenzy you miss the important point and you have to start over again?)
- With the data collected in the TimeMachine I want to be able to plot the evolution of any variable / class member at a particular breakpoint. I would call that visual watched variables.
- statistics and vector operations on the data. Because in 2014 we can do better than a hexadecimal dump of memory.
- a memory visualiser that can interpret a chunk of memory as some common data type and visualise it: 8, 16, 24, 32 bit PCM audio, rgb/rgba/grey level pictures, plain text (and variants) with configurable encoding, etc.
- a plugin system for custom data type visualisation

Some features:

- load an iOS or MacOSX application
- get infos from the iOS devices plugged on the computer
- see the list of compile units
- pause / step over / step in / step out / continue
- load C/ObjC/C++ source and display it with syntax colouring (thanks to libclang)
- add/remove breakpoints from the source code by clicking in the gutter
- list the module dependencies (dylibs, frameworks, etc) and object files
- launch the program on the Mac or iOS device (will ask for permission, etc)
- stop on breakpoints
- inspect variables
- display a crude plot when you select a variable that contains a std::list, std::vector or array of simple types (signed and unsigned integers of 8, 16, and 32 bits, floats, doubles)
- probably more things that I have forgotten
- written in C++, mostly portable to linux and windows thanks to NUI (could be done in a couple of weeks I guess)
- the code is still very messy, particularly on the iOS side. Some features are not plugged in the UI yet (iOS devices that were already plugged at startup don’t show up in the UI, for example).

And the UI in itself is far from looking like a finished product; it is not even alpha grade in my opinion, but it works.
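To make the memory visualiser idea from the wishlist a bit more concrete, here is a minimal C++ sketch of one of the listed interpretations: treating a raw chunk of bytes as 16-bit PCM audio. It is an illustration only and not Xspray code. The function name `decode_pcm16_le` is hypothetical, and the sketch assumes signed, little-endian, single-channel samples, which is only one of several possible PCM layouts.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: interpret a raw memory chunk as signed 16-bit
// little-endian PCM and return samples normalised to [-1.0, 1.0).
// A trailing odd byte, if any, is ignored.
std::vector<float> decode_pcm16_le(const std::uint8_t* bytes, std::size_t size) {
    std::vector<float> samples;
    samples.reserve(size / 2);
    for (std::size_t i = 0; i + 1 < size; i += 2) {
        // Assemble the unsigned 16-bit value, low byte first.
        int value = bytes[i] | (bytes[i + 1] << 8);
        // Map 0x8000..0xFFFF to the negative range (two's complement).
        if (value >= 32768) {
            value -= 65536;
        }
        samples.push_back(static_cast<float>(value) / 32768.0f);
    }
    return samples;
}
```

The resulting float vector could then be handed to whatever plotting code the front end already uses for std::vector of floats; other interpretations from the list (8/24/32 bit PCM, grey level pictures) would follow the same pattern with a different decoding step.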

Dependencies:

- lldb / llvm / clang of course
- NUI: my multi-platform UI framework on top of OpenGL.

I’d love to learn what you think about it. Last but not least: I’m currently looking for a job and I’d love to work on advanced developer tools :-).

If you’d like to hire a passionate senior dev with strong/cool ideas: